Overview

Neo-Nazi, white supremacist, and far-right groups and online businesses maintain a presence on Facebook. Facebook is the third most visited website on the Internet and is also the world’s largest social media network, with over 2.2 billion regular users as of February 2018. (“Facebook.com Traffic Statistics,” Alexa Internet, Inc., accessed January 25, 2019, https://www.alexa.com/siteinfo/facebook.com; Siva Vaidhyanathan, “The panic over Facebook's stock is absurd. It's simply too big to fail,” Guardian (London), July 27, 2018, https://www.theguardian.com/technology/2018/jul/27/facebook-stock-plunge-panic-absurd-too-big-too-fail.) According to the Pew Research Center, 68% of U.S. adults use Facebook, with 81% of people between the ages of 18 and 29 using the platform. (“Social Media Fact Sheet,” Pew Research Center, February 5, 2018, http://www.pewinternet.org/fact-sheet/social-media.) Because of its popularity, Facebook has become an important tool for political or community organizations and commercial brands, including, unfortunately, far-right extremists.

Even though the company explicitly bans hate speech and hate groups in its Community Standards (“Objectionable Content,” Facebook Community Standards, Facebook, accessed November 14, 2018, https://www.facebook.com/communitystandards/objectionable_content), Facebook appears to have a reactive approach to removing neo-Nazi and white supremacist content from its platform. For example, Facebook deactivated a page belonging to the Traditionalist Workers Party, a neo-Nazi group, only after it was revealed that the group participated in the 2017 Charlottesville Unite the Right rally. The page was reported to the company prior to the event. (Julia Carrie Wong, “A year after Charlottesville, why can’t big tech delete white supremacists?” Guardian (London), July 25, 2018, https://www.theguardian.com/world/2018/jul/25/charlottesville-white-supremacists-big-tech-failure-remove.) In another instance, a Huffington Post investigation in summer 2018 found that four neo-Nazi and white supremacist clothing stores were able to operate on Facebook. The tech giant finally removed the pages once it was faced with negative press. (Nick Robins-Early, “Facebook and Instagram let neo-Nazis run clothing brands on their platforms,” The Huffington Post, August 2, 2018, https://www.huffingtonpost.com/entry/facebook-nazi-clothing-extremism_us_5b5b5cb3e4b0fd5c73cf2986.) Despite seeing astronomical revenues of approximately $40.7 billion in 2017, Facebook is failing to enforce its own Community Standards in a proactive manner. (“Facebook’s annual revenue and net income from 2007 to 2017 (in million U.S. dollars),” Statista, accessed January 25, 2019, https://www.statista.com/statistics/277229/facebooks-annual-revenue-and-net-income/.)

In September 2018, the Counter Extremism Project (CEP) identified and monitored a small selection of 40 Facebook pages belonging either to online stores that sell white supremacist clothing, music, or accessories, or to white supremacist or neo-Nazi groups. Pages were located through searches for known white supremacist or neo-Nazi keywords. CEP researchers recorded information for each page such as the number of likes, date of creation, and examples of white supremacist or neo-Nazi content. After two months, CEP reported the 35 pages that remained online to Facebook and found that only four pages were ultimately removed. Clearly, Facebook’s process for reviewing and removing this content, which violates its own Community Standards, is inadequate.

What Content is Allowed on Facebook?

Facebook is a private company that is able to restrict certain types of content that do not fit its mission statement, business model, or ethos. (“Terms of Service,” Facebook, April 19, 2018, https://www.facebook.com/terms.php.) The site’s Community Standards state that Facebook prohibits organizations or individuals involved in “organized hate” from maintaining a Facebook presence, as well as content “that expresses support or praise for groups, leaders, or individuals involved in these activities.” (“Objectionable Content,” Facebook Community Standards, Facebook, accessed November 14, 2018, https://www.facebook.com/communitystandards/objectionable_content.) Facebook defines a hate organization as:

Any association of three or more people that is organized under a name, sign, or symbol and that has an ideology, statements, or physical actions that attack individuals based on characteristics, including race, religious affiliation, nationality, ethnicity, gender, sex, sexual orientation, serious disease or disability. (“Objectionable Content,” Facebook Community Standards, Facebook, accessed November 14, 2018, https://www.facebook.com/communitystandards/objectionable_content.)

An unidentified Facebook spokesperson further clarified the company’s policy toward hate groups on its platform, telling a journalist, "It doesn’t matter whether these groups are posting hateful messages or whether they’re sharing pictures of friends and family…as organized hate groups, they have no place on our platform." (Julia Carrie Wong, “A year after Charlottesville, why can’t big tech delete white supremacists?” Guardian (London), July 25, 2018, https://www.theguardian.com/world/2018/jul/25/charlottesville-white-supremacists-big-tech-failure-remove.)

Key Findings

After the two-month period, CEP reported the 35 pages that were still online for violating the site’s Community Standards. Following reporting, Facebook claimed that six out of 35 (17%) were removed, but when CEP looked to confirm removal of these pages, only three of the six (9% of the total reported pages) were actually removed. Bizarrely, the other three pages were not deleted, despite Facebook’s claim that they were removed for hate speech.

Separately, CEP found that one page belonging to the white supremacist group the Scottish Nationalist Society was removed, either by Facebook employees or by the page administrators. This happened even though, after CEP reported the page, Facebook claimed that it did not violate policies regarding hate speech and would not be removed.

Over a two-month period, CEP found that a small selection of 35 Facebook pages belonging to businesses or groups that support white supremacism or neo-Nazism increased in popularity, growing by a total of at least 2,366 likes.

Overall, CEP observed that an overwhelming majority of neo-Nazi and white supremacist pages—31 out of 35 pages—that were reported to Facebook for violating its Community Standards regarding hate speech and organized hate were permitted to remain on the platform.

Facebook also said that it removed content from two pages—the white supremacist online store Angry White Boy and the white supremacist group Be Active Front—but allowed the pages themselves to remain online. This is especially troubling given that these pages clearly violated the platform’s policy on hate speech and organized hate.

Methodology and Scope

Search Procedure and Data Collection

Searches for Facebook pages were conducted on the platform using keywords associated with various white supremacist groups and far-right ideologies. Examples of keywords included the names of known white supremacist groups, bands and record labels, and established white supremacist slogans such as “white pride worldwide.” In some cases, additional pages were located after being recommended as “related pages” by Facebook or by viewing pages “liked” by another page.

Once a neo-Nazi, white supremacist, or far-right Facebook page was located, CEP researchers recorded key data, such as date of creation and the number of likes. Two months after the original data was taken, the pages were revisited in order to record the change in the number of likes.

A Facebook page was also classified as: active (if it had an administrator post within the past month), semi-active (if it had an administrator post within the past three months), or inactive (if the most recent post was more than three months old). Inactive pages are not necessarily obsolete. It is possible that the page’s administrator may still respond to private Facebook messages, individuals may still locate other people interested in white supremacist activities, and users could still use the page to locate propaganda.
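The three activity categories can be expressed as a simple rule on the age of a page's most recent administrator post. The following is a minimal sketch, not CEP's actual tooling: the function name `classify_page` is hypothetical, and the report does not say how "one month" and "three months" were counted, so the 30-day and 90-day cutoffs are assumptions.

```python
from datetime import date, timedelta

# Assumed cutoffs: the report says "past month" and "past three months"
# without giving exact day counts.
ONE_MONTH = timedelta(days=30)
THREE_MONTHS = timedelta(days=90)


def classify_page(last_admin_post: date, observed_on: date) -> str:
    """Return 'active', 'semi-active', or 'inactive' for a page.

    last_admin_post: date of the most recent administrator post.
    observed_on: date on which the page was examined.
    """
    age = observed_on - last_admin_post
    if age <= ONE_MONTH:
        return "active"
    if age <= THREE_MONTHS:
        return "semi-active"
    return "inactive"


# Example with made-up post dates, observed on the September 7, 2018 start date.
observed = date(2018, 9, 7)
print(classify_page(date(2018, 8, 20), observed))
print(classify_page(date(2018, 7, 1), observed))
print(classify_page(date(2018, 1, 1), observed))
```

The post dates in the example are invented for illustration and do not correspond to any page in the sample.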

What Facebook pages were included?

Facebook pages were included if they sold white supremacist clothing, accessories, music, or merchandise portraying bands that promote white supremacy. (Some pages had an active “store” function on Facebook with links to purchase items on a separate website. Other pages posted photos of merchandise with information or links on how to purchase the items.) Facebook band pages for white supremacist bands were not included in this report. In several cases, examples of white supremacist content are dependent on codes, symbols, or knowledge of the white supremacist/neo-Nazi punk, hardcore, or metal music scenes. This is especially the case regarding merchandise intended for a European audience, where certain symbols such as the Nazi swastika might be banned. Evidence of white supremacist merchandise was also recorded. (See Appendix 1.)

In addition, Facebook pages for white supremacist groups were included. In some cases, group pages did not post specific content related to the group such as propaganda, events, membership rules, etc. Even in the absence of such information, these pages were included because they are still forbidden under Facebook’s Community Standards and because the pages provide a forum for supporters to communicate, as well as a way for individuals to signal their group affiliation. (It is also possible that non-public conversations occurred between group members in private posts or private chats.)

Measuring Audience

Pages were measured by their number of “likes.” Liking pages allows Facebook users to signal their tastes, personal preferences, and politics, while also allowing the user to see page updates and gain access to comment sections. While pages may increase in likes due to the rising popularity of a group or brand, a page might decrease in likes due to the posting of uninteresting content or the deletion of personal Facebook accounts.

Reporting

After monitoring these select 40 Facebook pages between September and November, CEP observed that five were removed within that period. After the two-month period, CEP reported the remaining 35 Facebook pages for removal for violating the site’s Community Standards. Following CEP’s reporting, pages were examined one day later and then one week later to determine which pages were taken down, if at all.


Data

Data Summary

- Between September and November and prior to CEP reporting all pages to Facebook for removal, only five out of 40 pages were removed by the tech company for violating its Community Standards or were made inaccessible.

- After the two-month period, CEP found that among the 35 remaining pages, those that gained likes grew by a total of 2,366 likes, while those that lost likes declined by a total of a mere 28 likes.

- Active pages (those with a post within the past month) grew 73 times faster than inactive pages (those whose most recent post was more than three months old). Still, nearly half of the inactive pages continued to increase in audience size, despite the lack of activity by page administrators.

Data

On September 7, 2018, CEP identified and collected data on a small sample of 40 neo-Nazi or white supremacist Facebook pages. Of those 40 pages, 18 pages were for online businesses that sold merchandise or music, and 22 pages were for groups or organizations. Two months later, on November 7, CEP revisited each of the 40 pages to analyze any changes to the page and to measure audience size.

September data for 18 stores selling white supremacist clothing, music, or accessories:

| Measure | Value |
| --- | --- |
| Number of active pages | 11 |
| Number of semi-active pages | 2 |
| Number of inactive pages | 5 |
| Smallest number of likes | 83 |
| Largest number of likes | 17,422 |
| Average number of likes | 2,240 |

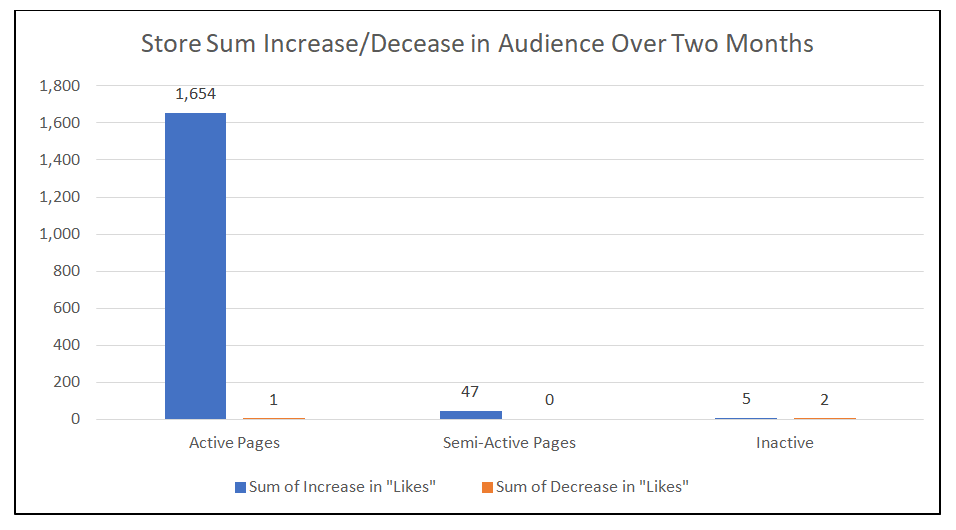

On November 7, CEP revisited these 18 pages and observed:

| Measure | Observation |
| --- | --- |
| Audience change for 11 pages active in September | Nine pages increased by a total of 1,654 likes. One saw no change in likes. One page decreased by one like. |
| Audience change for two pages semi-active in September | Two pages increased by a total of 47 likes. |
| Audience change for five pages inactive in September | One page increased by five likes. One page decreased by two likes. Three pages were either deleted by Facebook or were inaccessible. (Note: One Facebook page was inaccessible in early November but returned to active status later that same month. However, since it was inaccessible on November 7, it is classified as “inactive.”) |
| Smallest number of likes (November) | 84 |
| Largest number of likes (November) | 5,844 (Note: Three pages were deleted or were inaccessible in early November.) |
| Average number of likes (November) | 1,560 |

September data for 22 white supremacist organizations and groups:

| Measure | Value |
| --- | --- |
| Number of active pages | 12 |
| Number of semi-active pages | 1 |
| Number of inactive pages | 9 |
| Smallest number of likes | 14 |
| Largest number of likes | 1,608 |
| Average number of likes | 465 |

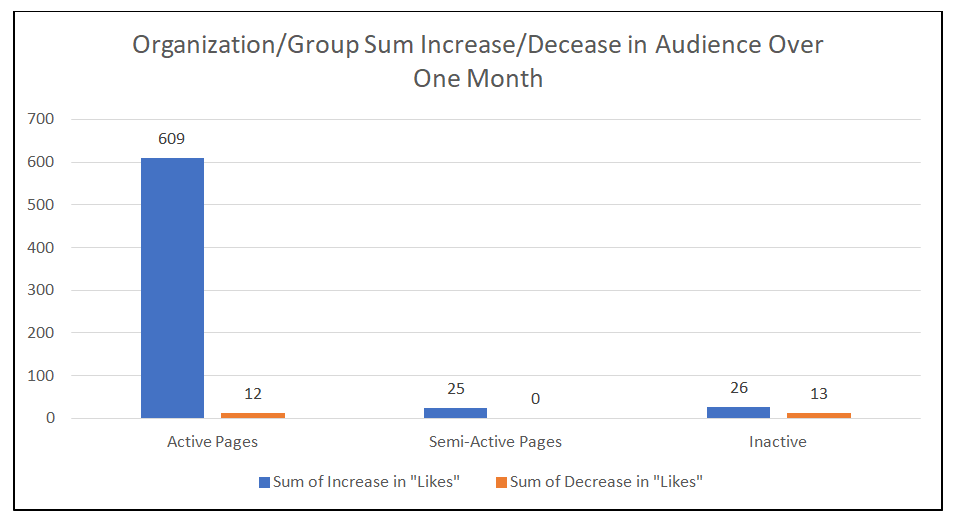

On November 7, CEP revisited these 22 pages and observed:

| Measure | Observation |
| --- | --- |
| Audience change for 12 pages active in September | Nine pages increased by a total of 609 likes. Three pages decreased by a total of 12 likes. |
| Audience change for one page semi-active in September | One page grew by 25 likes. |
| Audience change for nine pages inactive in September | Three pages grew by a total of 26 likes. One page saw no change in likes. Three pages decreased by a total of 13 likes. Two pages were either deleted by Facebook or were inaccessible. |
| Smallest number of likes (November) | 19 |
| Largest number of likes (November) | 1,633 |
| Average number of likes (November) | 541 |
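The headline figures in the Data Summary can be cross-checked against the per-category numbers in the store and group tables. The sketch below is only an illustration: the variable names are hypothetical, and the report does not state how the "73 times faster" figure was calculated. It is read here, as an assumption, as the ratio of gross likes gained by active pages to gross likes gained by inactive pages.

```python
# (likes gained, likes lost) per activity category between
# September 7 and November 7, 2018, copied from the tables in this section.
stores = {"active": (1654, 1), "semi-active": (47, 0), "inactive": (5, 2)}
groups = {"active": (609, 12), "semi-active": (25, 0), "inactive": (26, 13)}

GAINED, LOST = 0, 1


def total(index: int) -> int:
    """Sum gains (index 0) or losses (index 1) across stores and groups."""
    return sum(table[category][index]
               for table in (stores, groups)
               for category in table)


total_gained = total(GAINED)
total_lost = total(LOST)

# Assumed reading of "73 times faster": gross likes gained by pages that were
# active in September divided by gross likes gained by inactive pages.
active_gained = stores["active"][GAINED] + groups["active"][GAINED]
inactive_gained = stores["inactive"][GAINED] + groups["inactive"][GAINED]
growth_ratio = active_gained / inactive_gained

print(total_gained, total_lost, round(growth_ratio))  # prints: 2366 28 73
```

Under this reading, the table figures reproduce the 2,366 likes gained, the 28 likes lost, and the factor of 73 cited in the summary.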

Reporting

After the two-month data collection and analysis period, CEP reported the remaining 35 of the original 40 pages to Facebook for removal for violating the site’s Community Standards on hate speech. (One Facebook page was suspended on November 7, but was reactivated when pages were reported in November.) By the time CEP reported the pages, five had already been removed by either Facebook or the page administrators. For a complete list of justifications for removal as provided by Facebook to CEP, see Appendix 1. Following reporting, Facebook claimed that six out of 35 (17%) were removed, but when CEP looked to confirm removal of these pages, only three of the six (9% of the total reported pages) were actually removed. (An additional page was later removed by Facebook several days after being reported by CEP. However, Facebook stated that the page did not violate its Community Standards. The removal of this page brought the total number of removed pages to four.) Bizarrely, the other three pages were not deleted, despite Facebook’s claim that they were removed for hate speech. (Pages were re-checked the day after receiving reporting messages from Facebook and then rechecked one week later.) In all three cases, Facebook claimed that “We removed the Page you reported,” because “it violated our Community Standards.” (Facebook message, see Appendix 2.) The company did not clarify or explain why these pages remained online.

Separately from the six pages Facebook claimed to have removed, CEP found that one page belonging to the white supremacist group the Scottish Nationalist Society was removed, either by Facebook employees or by the page administrators. This happened even though, after CEP reported the page, Facebook claimed that it did not violate policies regarding hate speech and would not be removed. With the removal of the Scottish Nationalist Society’s Facebook page, only four out of 35 reported pages were ultimately taken down.

After CEP reported the remaining 35 pages to Facebook, the social media company claimed that it removed an unidentified quantity of content from two pages (the white supremacist online store Angry White Boy and the white supremacist group Be Active Front) but allowed the pages themselves to remain online. This is especially troubling given that these pages clearly violate the platform’s policy on hate speech and organized hate. Additionally, Facebook did not specify what content was removed for violating its Community Standards or explain why it allowed these pages to remain on the platform, which is indicative of the company’s failure to be transparent about its content and account removal process.

Overall, CEP observed that an overwhelming majority of neo-Nazi and white supremacist pages (31 out of 35 pages) that were reported to Facebook for violating its Community Standards regarding hate speech and organized hate were not removed. (The four pages removed include three pages that Facebook stated were removed, plus an additional page, the Scottish Nationalist Society, that Facebook said it would not remove but that was, in fact, removed.) All pages reported by CEP belong either to online businesses that offer products that are in violation of Facebook’s policies on hate speech, or to groups that violate the platform’s policies on organized hate groups.
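The reporting outcome reduces to a few simple proportions. The short sketch below recomputes them from the counts given in this section. The variable and function names are hypothetical, and the final percentage (the share of reported pages left online) is derived here from the report's counts rather than stated in it.

```python
reported = 35                # pages CEP reported to Facebook
claimed_removed = 6          # pages Facebook said it removed
confirmed_removed = 3        # of those six, pages actually found offline
removed_despite_denial = 1   # Scottish Nationalist Society page


def pct(part: int, whole: int) -> int:
    """Percentage of `whole` represented by `part`, rounded to a whole number."""
    return round(100 * part / whole)


total_removed = confirmed_removed + removed_despite_denial
still_online = reported - total_removed

print(pct(claimed_removed, reported))    # 17, the share Facebook claimed to remove
print(pct(confirmed_removed, reported))  # 9, the share confirmed removed
print(total_removed, still_online)       # 4 removed, 31 still online
print(pct(still_online, reported))       # 89, derived here, not stated in the report
```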

Conclusion

Facebook’s inability to remove white supremacist and neo-Nazi stores and groups consistently and transparently demonstrates that the firm is failing to enforce its own Community Standards. Moreover, its reporting mechanisms and processes are nowhere near sufficient. A company’s Terms of Service and Community Standards are only as strong as the platform’s willingness to enforce them.

Of the 40 Facebook pages belonging to white supremacist and neo-Nazi stores and groups identified in September 2018, 35 pages were still online in November 2018. These pages saw an increase of 2,366 likes over those two months. Even after CEP reported the remaining 35 pages to Facebook, only four pages were removed. This means that Facebook decided to allow 12 businesses that sell merchandise promoting white supremacist and neo-Nazi themes, and 19 groups that endorse white supremacist and neo-Nazi ideology, to remain on its platform.

When neo-Nazi groups that clearly violate the platform’s policies are allowed to maintain pages, it raises serious questions regarding Facebook’s commitment to its own policies. The failure to remove content that clearly violates the platform’s policies on hate speech and hate groups indicates a lack of awareness or lack of desire to remove harmful content. Either case is unacceptable and demands action in order to prevent groups that actively promote racism, xenophobia, homophobia, anti-Semitism, and Islamophobia from using the platform.

It is not enough for Facebook to release misleading statements filled with apologies, empty promises to improve policies and practices, and a simple listing of the quantity of content that it has removed. Facebook must be held accountable for failing to take a much more proactive approach toward removing content and accounts that violate its Community Standards, and for failing to thoroughly investigate reported pages.

Recommendations

CEP proposes several recommendations in order for Facebook to prevent white supremacists and neo-Nazis from profiting from the sale of merchandise on Facebook and maintaining group pages on the site.

Training content reviewers: Facebook should make sure that their content reviewers receive adequate training on white supremacist and neo-Nazi movements and organizations. Content reviewers must have specialized knowledge of the symbols and codes those groups and individuals use, as well as the ways they operate online.

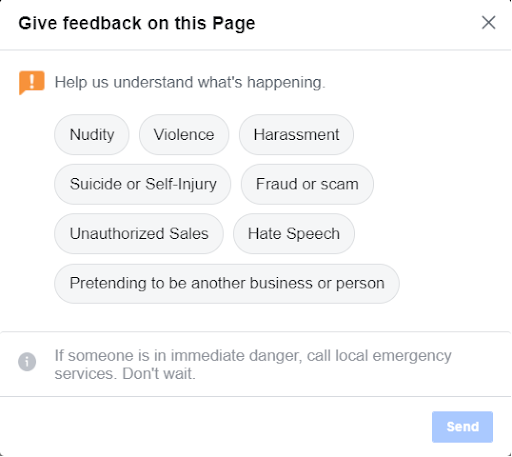

Easy and clear reporting process: Facebook should make it easier for Facebook users to report pages for specific Community Standards violations, such as being a hate group. At the time of this writing, the reasons available for reporting a page do not include the wide variety of potential Community Standards violations. Facebook should also allow users to include several sentences regarding why they are reporting a page. This would permit users to include useful information for content reviewers, such as a description of a group’s ideology, the meaning of a particular symbol, or prior acts of violence. Furthermore, pages belonging to white supremacist or neo-Nazi groups that are reported to Facebook content reviewers should be given extra scrutiny because content posted on the page itself might not directly or explicitly reference extremist ideology or violent acts.

Increase transparency: Facebook should improve transparency regarding its content removal policies. In particular, Facebook should provide clear reasons and explanations for cases in which it claims to have removed content but that content has remained online.